Using XReco’s latest toolset for neural rendering, 3D reconstruction, and advanced AI-based video processing, we developed a novel music video format that offers a new viewing experience on mobile phones and on Apple’s Vision Pro XR headset.

Free Viewpoint Music Video Production

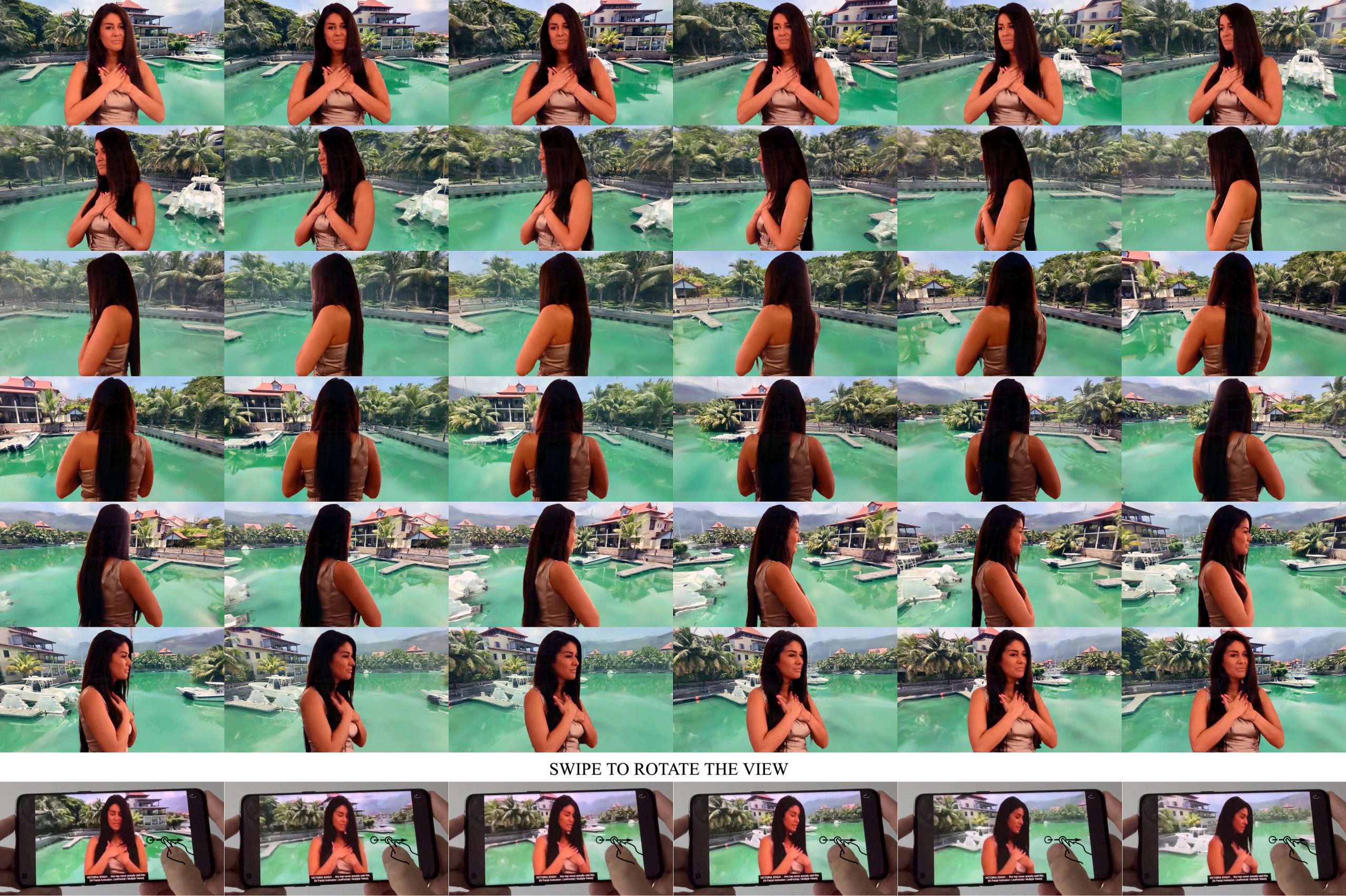

Bullet-time shots (familiar from the 1999 film The Matrix) freeze a moment in time: the camera creates the illusion that time has stopped while the viewer appears to move around the subject. Free Viewpoint Video (FVV) goes beyond this effect. Rather than freezing a single moment, it gives viewers the freedom to move around and watch the content from any angle. To make such an experience playable in real time on mobile and XR devices, we developed a custom media player that supports 36 simultaneous views and uses encoding optimized for compression. Our 2:30-minute music video demonstrates several of these tools and innovations.

Figure 1: 36 rendered views showing an actor interacting with a large-scale 3D Gaussian Splatting model of a Seychelles harbor (above). Viewers can swipe to rotate the camera and watch the content from any angle on their mobile phones (below).
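The article does not describe how the player maps a swipe to a viewpoint. As a minimal sketch, assuming the 36 views are spaced evenly around a full circle (10° apart), a horizontal swipe can be converted into a camera angle and then into the two nearest views plus a blend weight. The function name `view_for_swipe` and the sensitivity parameter `degrees_per_px` are hypothetical.

```python
import math

NUM_VIEWS = 36  # from the article: 36 rendered views
# Assumption: the views are evenly spaced around a full 360-degree circle.
DEGREES_PER_VIEW = 360.0 / NUM_VIEWS


def view_for_swipe(start_angle_deg: float, swipe_dx_px: float,
                   degrees_per_px: float = 0.25) -> tuple[int, int, float]:
    """Map a horizontal swipe to two neighbouring views and a blend weight.

    degrees_per_px is a hypothetical swipe sensitivity.
    Returns (view_a, view_b, t), where t in [0, 1) is the weight of view_b.
    """
    # New camera angle, wrapped into [0, 360).
    angle = (start_angle_deg + swipe_dx_px * degrees_per_px) % 360.0
    # Fractional index into the ring of views.
    position = angle / DEGREES_PER_VIEW
    # The extra modulo guards against float wrap-around producing 360.0.
    view_a = int(math.floor(position)) % NUM_VIEWS
    view_b = (view_a + 1) % NUM_VIEWS
    t = position - math.floor(position)
    return view_a, view_b, t
```

For example, `view_for_swipe(0, -20)` rotates the camera back by 5°, landing halfway between view 35 and view 0. A player could snap to the nearest view, which keeps decoding cheap, or crossfade the two streams, which hides the jump between neighbouring views at the cost of decoding both.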

Neural Rendering for Viewpoint Interpolation

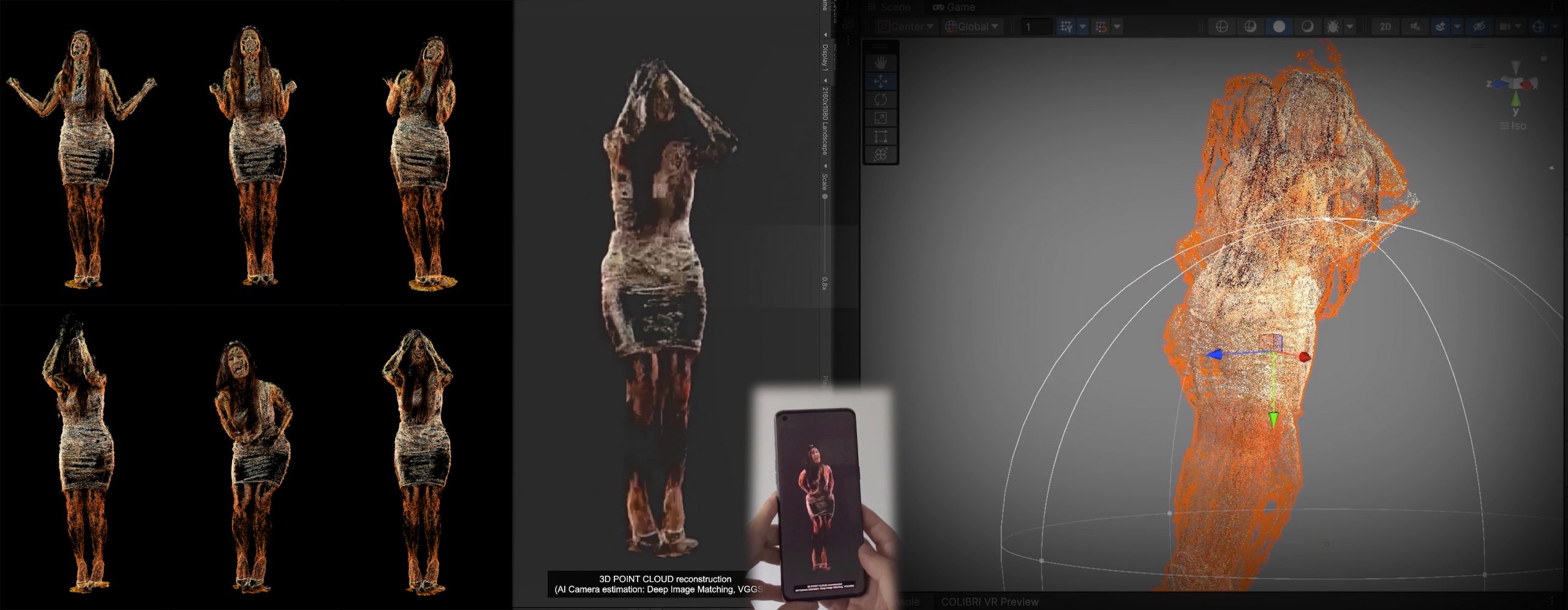

The key elements of our complex production pipeline are recovering the moving shape of the actor’s body and gestures from the original studio recordings and combining it with large-scale reconstructions of outdoor scenery (beaches, harbors, forest, cave) as well as smaller artifacts (seashells, flowers, African masks and statues) captured with mobile phones and consumer 360º cameras. Specifically, we first reconstructed point clouds by recovering camera motion and estimating imaging parameters, and then deployed 3D Gaussian Splatting (3DGS) and Structure-from-Motion (SfM) services to create 3D spatial representations of the subjects.

Figure 2: The XReco Unity Authoring tool was used to render multiple full-figure point-cloud reconstructions.
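For readers new to 3DGS, the step that turns a reconstructed scene into pixels is front-to-back alpha compositing of depth-sorted Gaussians: each splat contributes its colour weighted by its opacity and by the transmittance left over from the splats in front of it, C = Σᵢ cᵢ αᵢ ∏ⱼ<ᵢ (1 − αⱼ). The sketch below evaluates this for a single pixel, assuming the Gaussians have already been projected to 2D and their colours evaluated for the current view. The data layout, names, and clamping constants are illustrative and not taken from the XReco tools.

```python
import math
from dataclasses import dataclass


@dataclass
class ProjectedSplat:
    depth: float                         # camera-space depth, used for sorting
    mean: tuple[float, float]            # projected 2D centre in pixels
    inv_cov: tuple[float, float, float]  # (a, b, c) of inverse 2x2 covariance [[a, b], [b, c]]
    opacity: float                       # learned opacity in [0, 1]
    color: tuple[float, float, float]    # RGB colour already evaluated for this view


def composite_pixel(px: float, py: float, splats: list[ProjectedSplat],
                    min_transmittance: float = 1e-4):
    """Front-to-back alpha compositing of 2D Gaussians at pixel (px, py).

    Returns (rgb, remaining_transmittance).
    """
    color = [0.0, 0.0, 0.0]
    transmittance = 1.0
    # Nearest splats first, so later ones are attenuated by those in front.
    for s in sorted(splats, key=lambda sp: sp.depth):
        dx, dy = px - s.mean[0], py - s.mean[1]
        a, b, c = s.inv_cov
        # Gaussian falloff: exp(-0.5 * d^T Sigma^-1 d).
        power = -0.5 * (a * dx * dx + 2.0 * b * dx * dy + c * dy * dy)
        alpha = min(0.99, s.opacity * math.exp(power))  # illustrative clamp
        if alpha < 1.0 / 255.0:
            continue  # contribution too small to be visible
        for k in range(3):
            color[k] += transmittance * alpha * s.color[k]
        transmittance *= 1.0 - alpha
        if transmittance < min_transmittance:
            break  # pixel is effectively opaque; stop early
    return tuple(color), transmittance
```

With a half-opaque red splat in front of a half-opaque green one, the pixel ends up 0.5 red and 0.25 green, with a quarter of the background still showing through.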

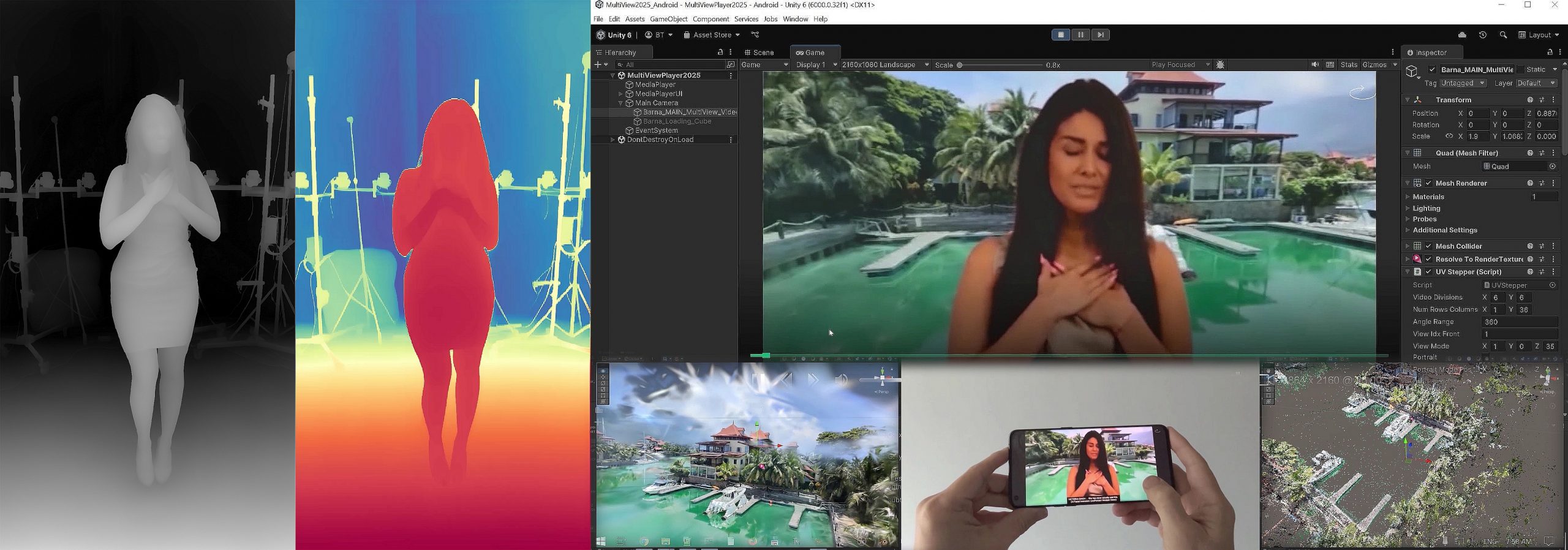

The captured studio footage, however, requires removing the cluttered background behind the singer, a step known as mask generation. We achieve this by combining AI-based human-matting and depth-estimation algorithms. Finally, we bring these data sets and image sources into our Unity tool to visualize them and merge them into a single unified representation, so that the scene can be re-rendered from any angle with a directed virtual camera.

Figure 3: By integrating AI algorithms for human matting and depth estimation with the 3D Gaussian Splatting technique, we achieve real-time visualization, dynamic relighting, and high-fidelity rendering within the Unity engine.
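The article does not say exactly how the matting and depth outputs are combined. One plausible scheme, shown here as a hypothetical sketch, keeps the soft alpha produced by the human-matting model only where the estimated depth places the pixel close enough to belong to the singer, so background clutter that the matting model wrongly keeps is cut away. The function name `combine_mask` and the threshold `max_depth_m` are assumptions, and plain nested lists stand in for image arrays.

```python
def combine_mask(alpha_matte: list[list[float]], depth: list[list[float]],
                 max_depth_m: float = 3.0) -> list[list[float]]:
    """Gate a soft human-matting alpha with a depth threshold (hypothetical scheme).

    alpha_matte: per-pixel alpha in [0, 1] from a matting model.
    depth: per-pixel depth in metres from a depth estimator.
    max_depth_m: hypothetical cut-off; farther pixels are treated as background.
    """
    if len(alpha_matte) != len(depth):
        raise ValueError("matte and depth map must have the same height")
    mask = []
    for alpha_row, depth_row in zip(alpha_matte, depth):
        if len(alpha_row) != len(depth_row):
            raise ValueError("matte and depth map must have the same width")
        # Keep the soft matte value for near pixels; zero out far ones.
        mask.append([a if d <= max_depth_m else 0.0
                     for a, d in zip(alpha_row, depth_row)])
    return mask
```

Keeping the matte's soft values rather than thresholding them preserves fine edges such as hair, while the depth gate only removes regions that are clearly behind the performer.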

Pushing the Limits of Technology

Since we needed to generate 36 different views, it is easy to see how a 2.5-minute video turned into a full-scale production of 1.5 hours of footage. In addition, our creative ideas and technology tests called for i) having the actor speak lines that were not in the original recording, ii) developing animated 3DGS models to create a singing African mask driven by facial movements tracked consistently across multiple camera views, iii) driving particle effects from the reconstructed moving point clouds, and even iv) relighting the entire 3DGS scene to match the mood of the song in each of the 18 cuts.
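The jump from 2.5 minutes to 1.5 hours is simply the runtime multiplied by the number of views. Assuming, for illustration only, a frame rate of 25 fps (the frame rate is not stated), the number of frames to render follows as well:

$$
36 \times 2.5\ \text{min} = 90\ \text{min} = 1.5\ \text{h}
$$

$$
90\ \text{min} \times 60\ \tfrac{\text{s}}{\text{min}} \times 25\ \tfrac{\text{frames}}{\text{s}} = 135{,}000\ \text{frames}
$$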

Arguably, the future of entertainment lies in delivering immersive, personalized experiences that surround the viewer with spatially situated content and offer interactivity never before possible, thereby reaching the highest degree of immersion. We therefore ported our MultiView technology to one of the latest such devices, the Apple Vision Pro. This new device, however, required us to experiment with new forms of user interaction and to develop truly spatial interfaces that exist within a home or workplace.

While this work is still in progress, a preview of it (along with many other XReco technologies) will be showcased at IBC in Amsterdam in the fall of 2025.

Figure 4: Apple Vision Pro offers an immersive experience where users can move around the singer and view them from any angle. The platform also includes extra behind-the-scenes videos offering a glimpse into the future of cinema.

Watch the video about the Multiview XR Experience Production.


FFP’s Work in the XReco Project

FFP is a creative-industry partner that tests the technologies developed in XReco in real-world productions and in selected use cases from the media, travel, and entertainment sectors. FFP also advances research on NeRFs, 3D Gaussian Splatting, multi-camera capture systems, and Unity-based player development.

About The Author:

Barnabas Takacs, Ph.D. (www.linkedin.com/in/barnabastakacs/)

FFP Productions, Vienna, AT (https://vimeo.com/ffpproductions)

Resources

- References to the singer Viki Singh: https://www.singhvikimusic.hu/ and https://www.youtube.com/watch?v=GFguyoiXrvk

- XReco Blog article “Neural Radiance Fields”: https://xreco.eu/realistic-3d-worlds-with-neural-radiance-fields
