Learn how vitrivr, our multimedia retrieval system, turns user intent into a list of relevant results. This open-source tool from the University of Basel is the engine behind XReco’s search functionality. Delve into feature representations, vector spaces, and the modular architecture that defines vitrivr.

Navigating a Wealth of Multi-Modal Assets

The XReco toolchain relies on a cornucopia of assets that are provided by various partners through the XR marketplace and that can be used to build attractive and interactive, multi-modal XR content. These assets can range from simple images to videos and even 3D meshes or point clouds.

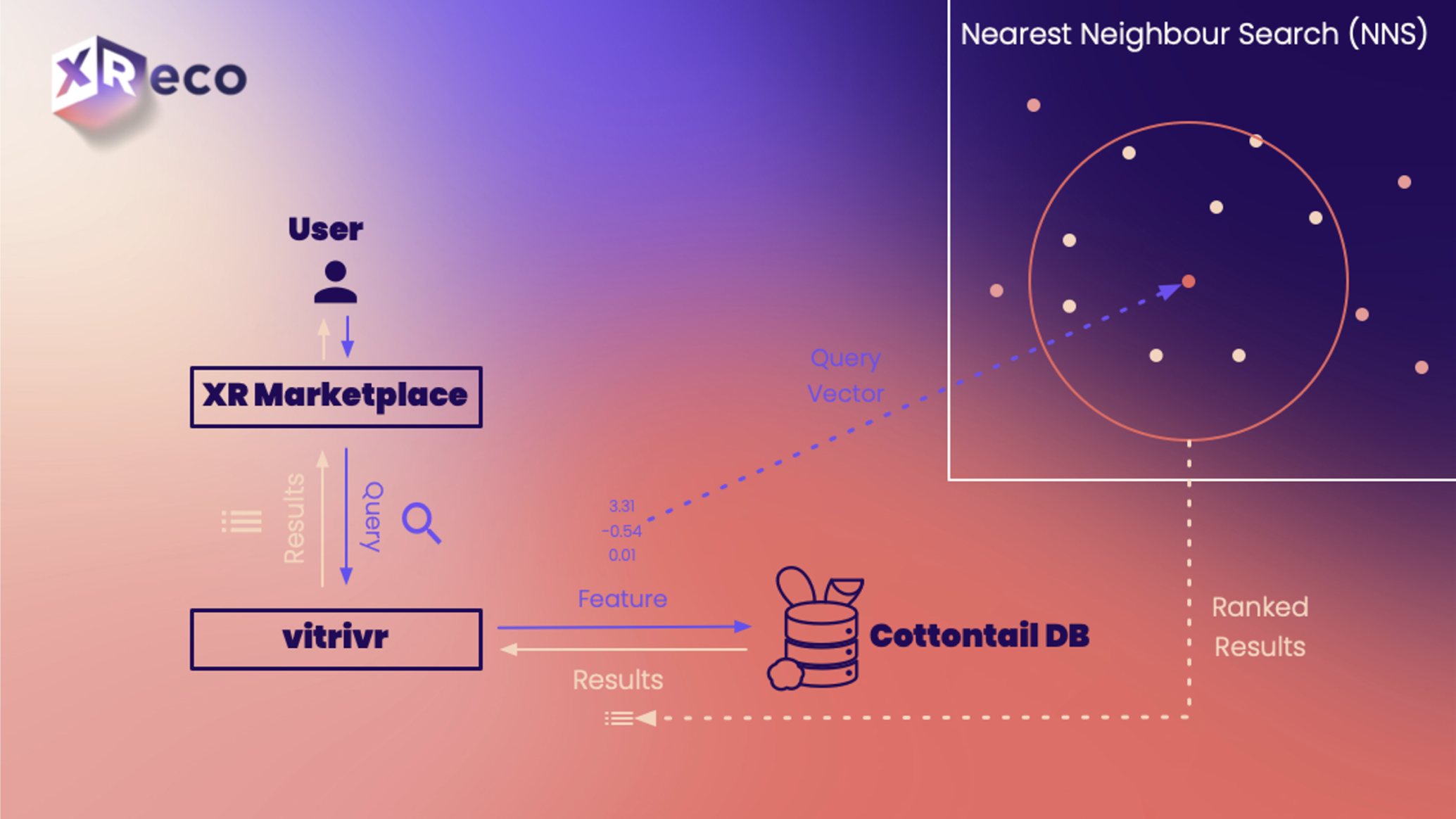

During the build process, an XReco user relies on search functionality to find assets that are relevant to their project. Finding such assets in a large haystack is the main task of the search and retrieval backend provided by vitrivr, which translates a user’s search intent into a list of relevant results. This is illustrated in Figure 1.

Figure 1: Similarity search in vitrivr and its integration into XReco's XR marketplace.

What is vitrivr?

vitrivr is a multimodal multimedia retrieval system developed by the Databases and Information Systems (DBIS) group at the University of Basel in Switzerland (see [1]). It allows its users to search for and find (= retrieve) items in large, mixed multimedia collections. Large parts of XReco’s multimedia retrieval backend will be based on vitrivr’s technology.

Ever since its conception, vitrivr has been built with modularity and extensibility in mind. It can therefore be extended in terms of the types of queries that it can accept as well as the machine-readable representations it can work with.

vitrivr is open-source software and freely available to both users and contributors.

More information can be found in [2] and [3]. If you wish to see vitrivr in action, we gladly refer you to this video: https://youtu.be/ODJGv0DU6WQ

How does multimedia retrieval work?

Most multimedia retrieval systems work with representations of the original assets called descriptors or features. These features are simplified (as in, “easily processable by a computer”) representations that each describe a specific aspect of the media asset. Very often, features are represented and stored as vectors in a high-dimensional vector space, similar to how we would describe a point on a 2-dimensional plane using coordinates (just with many more dimensions). That is, every asset is represented by one such point in this space (also called feature space or embedding space).

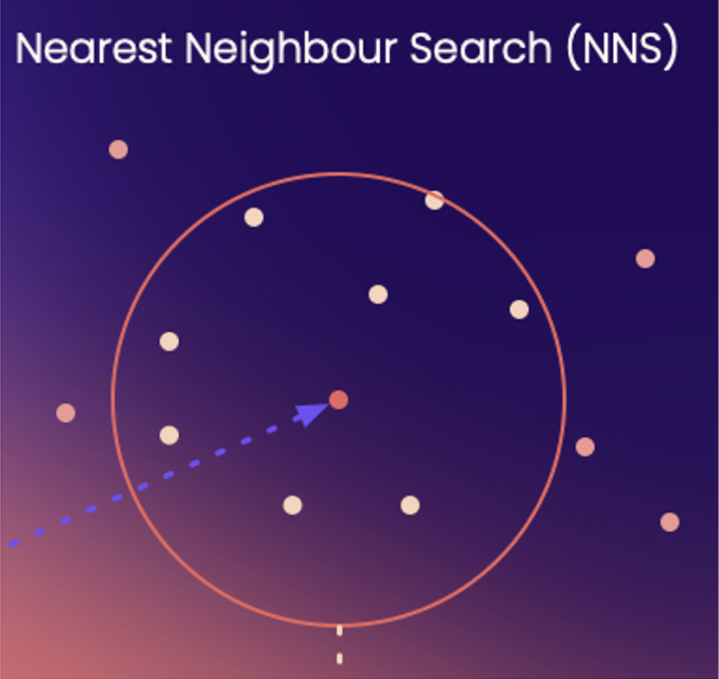

Using this feature representation, finding similar items for a given query can be expressed as finding the points in the feature space that are closest to the query vector under some distance measure (e.g., the Euclidean distance). This type of similarity search is called nearest neighbour search or proximity-based search, since it associates similarity with proximity. The principle is also illustrated in Figure 2.
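
To make the principle concrete, the following is a minimal sketch of a nearest neighbour search using the Euclidean distance. The asset names, the three-dimensional vectors and the function names are invented for illustration and are not taken from vitrivr; real features usually have far more dimensions.

```python
import math

# Hypothetical toy collection: asset id -> 3-dimensional feature vector.
# Real feature vectors typically have hundreds of dimensions.
FEATURES = {
    "sunset.jpg": [0.9, 0.1, 0.0],
    "forest.jpg": [0.1, 0.8, 0.2],
    "beach.mp4": [0.8, 0.2, 0.1],
    "statue.obj": [0.0, 0.3, 0.9],
}


def euclidean(a, b):
    """Euclidean distance between two vectors of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def nearest_neighbours(query, features, k):
    """Return the k (asset id, distance) pairs closest to the query vector."""
    scored = [(asset, euclidean(query, vec)) for asset, vec in features.items()]
    scored.sort(key=lambda pair: pair[1])  # smallest distance first
    return scored[:k]


query_vector = [1.0, 0.0, 0.0]
top2 = nearest_neighbours(query_vector, FEATURES, k=2)
for asset, distance in top2:
    print(f"{asset}: {distance:.3f}")
```

With these toy vectors, the two assets closest to the query are sunset.jpg and beach.mp4. Note that the sketch compares the query against every vector in the collection, which is only practical for small collections.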

vitrivr and its components facilitate this type of search. They also allow similarity search to be combined with the more traditional Boolean search paradigm, in which an item must match a query exactly (e.g., find items that exceed a specific size). Furthermore, vitrivr can work with any type of feature that can be represented as a vector and is completely extensible in that regard. This is particularly attractive for the XReco project, since different partners contribute specialized feature representations for the different types of assets we work with.
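
One simple way to picture the combination of Boolean and similarity search is to apply the exact-match condition first and then rank only the remaining candidates by distance. The sketch below assumes this filter-then-rank order; the asset records, the `size_kb` field and the function name are hypothetical and do not reflect how vitrivr evaluates such queries internally.

```python
import math

# Hypothetical assets: each has exact metadata and a feature vector.
ASSETS = [
    {"id": "a", "size_kb": 120, "vector": [0.9, 0.1]},
    {"id": "b", "size_kb": 4200, "vector": [0.7, 0.3]},
    {"id": "c", "size_kb": 9800, "vector": [0.1, 0.9]},
    {"id": "d", "size_kb": 5100, "vector": [0.8, 0.1]},
]


def filtered_search(query, assets, min_size_kb, k):
    """Boolean filter first, then nearest neighbour ranking on what is left."""
    # Boolean part: an asset either exceeds the size threshold or it does not.
    candidates = [a for a in assets if a["size_kb"] > min_size_kb]
    # Similarity part: rank the remaining candidates by Euclidean distance.
    ranked = sorted(candidates, key=lambda a: math.dist(query, a["vector"]))
    return [a["id"] for a in ranked[:k]]


result = filtered_search([1.0, 0.0], ASSETS, min_size_kb=1000, k=2)
print(result)
```

In this toy data, asset "a" is the closest to the query but is excluded because it does not satisfy the size condition, so the result contains "d" followed by "b".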

How is a user query being processed?

Given this basic data and query model, a user query is processed in three steps. In vitrivr, each of these steps is handled by a different component.

Query Formulation: Users of a retrieval system must be able to express their search intent. Often, this is done through textual input (e.g., keywords). However, in multimedia retrieval, other modes of operation are possible. Examples include (but are in no way limited to) query by example (e.g., an example image), query by sketch (e.g., a sketched input of a 2D image) or even more exotic modes such as query by humming (e.g., to search for a familiar song). This formulation step is handled by the different vitrivr user interfaces: a web-based one and a VR search system.

Query Translation: The query input created by the user must then be translated into a feature representation that can be processed. This conversion is handled by the middleware Cineast (see [4]), which delegates it to one of many feature modules, each of which knows how the translation works for its particular feature. Cineast then uses the resulting representation(s) to orchestrate the database lookup.

Database Lookup: Once a representation has been obtained, the nearest neighbour search must take place in the data collection. In vitrivr, we use a specialized database called Cottontail DB (see [5]) that is geared towards these types of proximity-based searches. The search can be executed either in a brute-force fashion, by computing the distance between the query and every vector in the database, or by using specialized index structures.

The resulting items are then ranked and sent back to the user interface for presentation. Since vitrivr has been built to be modular, any of the components can be adapted or replaced. This is an advantage for the XReco project, which will rely on its own user interface.

How can multi-modality be achieved?

The term “multi-modality” in the context of multimedia retrieval can mean different things, which is why we make a distinction here.

Multi-modality as in “Searching Different Types of Media”: The ability to search different types of media using the same feature representations is basically a data modelling problem. Many features inherently work for different media types. For example, one can use a feature that describes an image to also describe a key-frame of a video. In vitrivr, we use (customizable) segmentation and representative assets to handle the different media types. For example, a video is not processed in its entirety but is split into segments that are internally similar and that can be retrieved individually.

Multi-modality as in “Combining Different Types of Features”: The ability to combine different types of features to search for different aspects in combination (e.g., a description of the imagery and the audio in a video) can be achieved by (multi-modal) fusion. This stage typically follows the nearest neighbour search for the individual features and combines the individual distances into a single score. Over the years, many techniques have been proposed for different applications. In vitrivr, we support late fusion by assigning weights (= importance) to the individual features used in a query.
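
As an illustration of weighted late fusion, the sketch below turns per-feature distances into similarity scores and combines them as a weighted average. The linear distance-to-score mapping, the feature names, the distance values and the function names are assumptions made for this example; they are not vitrivr's actual scoring functions.

```python
def distance_to_score(distance, max_distance):
    """Map a distance in [0, max_distance] to a similarity score in [0, 1]."""
    return max(0.0, 1.0 - distance / max_distance)


def late_fusion(per_feature_distances, weights, max_distance=1.0):
    """Combine per-feature distances into one weighted score per asset.

    Assets that are missing from a feature's result list contribute a
    score of 0 for that feature.
    """
    total_weight = sum(weights.values())
    fused = {}
    for feature, distances in per_feature_distances.items():
        w = weights[feature] / total_weight  # normalise weights to sum to 1
        for asset, distance in distances.items():
            score = distance_to_score(distance, max_distance)
            fused[asset] = fused.get(asset, 0.0) + w * score
    # Highest combined score first.
    return sorted(fused.items(), key=lambda pair: pair[1], reverse=True)


# Hypothetical results of two separate nearest neighbour searches.
DISTANCES = {
    "visual": {"clip1": 0.2, "clip2": 0.6, "clip3": 0.4},
    "audio": {"clip1": 0.8, "clip2": 0.1, "clip3": 0.4},
}

visual_heavy = late_fusion(DISTANCES, {"visual": 3.0, "audio": 1.0})
balanced = late_fusion(DISTANCES, {"visual": 1.0, "audio": 1.0})
print([asset for asset, _ in visual_heavy])
print([asset for asset, _ in balanced])
```

With the visual feature weighted three times as heavily as the audio feature, clip1 ranks first; with equal weights, clip2 does. This shows how the weights let a user decide which aspect of a query matters most.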

Using Common Features for Different Modalities: Having a single, common embedding space for different modalities is a more recent development facilitated by advances in machine learning. Such techniques typically involve a neural network that, given inputs of different modalities (e.g., an image and textual descriptions thereof), produces a common output, i.e., vectors that are close to one another. Such co-embeddings can then be used, for example, to search for image content using an arbitrary textual description. In vitrivr, we have used visual-text co-embeddings for several years now (see [6]) with great success.

Conclusion

The ability to search in large multimedia collections is fundamental to reaching the goals set by the XReco project. Therefore, XReco is a perfect use case to put the multimedia retrieval system vitrivr to the test. In the same vein, the applications foreseen by the project also greatly benefit research in multimedia retrieval, with contributions to handling novel types of assets (e.g., point clouds and NeRFs) and using new types of features that exploit multi-modality, all at a larger scale than ever before. We are therefore very excited about the future of vitrivr and the XReco project, and we are looking forward to the contributions that we can make. Stay tuned!

Further Resources

[1] DBIS website – https://dbis.dmi.unibas.ch/

[2] vitrivr website – https://vitrivr.org/

[3] Organisation on GitHub – https://github.com/vitrivr

[4] Rossetto, Luca, Ivan Giangreco, and Heiko Schuldt. "Cineast: a multi-feature sketch-based video retrieval engine." 2014 IEEE International Symposium on Multimedia. IEEE, 2014.

[5] Gasser, Ralph, et al. "Cottontail DB: An open source database system for multimedia retrieval and analysis." Proceedings of the 28th ACM International Conference on Multimedia. 2020.

[6] Spiess, Florian, et al. "Multi-modal video retrieval in virtual reality with vitrivr-VR." International Conference on Multimedia Modeling. Cham: Springer International Publishing, 2022.
