Explore the revolution in immersive video technology with “FVV Live: Free Viewpoint Video System”. Developed by the Grupo de Tratamiento de Imágenes (GTI) of the Universidad Politécnica de Madrid (UPM), this technology aims to transform entertainment and beyond. Discover how this cost-effective, real-time system is breaking barriers in how we experience video, letting viewers choose their own perspective and opening new possibilities for engagement.

Unleash FVV Live: The Future of Immersive Video

FVV Live (Free Viewpoint Video) is an exciting innovation in the world of technology and entertainment that is transforming the way we experience videos and live events.

FVV Live is not only revolutionizing the entertainment industry but also holds unlimited potential in areas such as advertising, education, and product presentations, providing businesses with the opportunity to engage more impactfully with their audiences and customers.

Redefining Real-Time Visual Experiences

FVV Live is a groundbreaking end-to-end free viewpoint video system designed to operate at low cost and in real-time, based on standard components (see Figure 1). The system is engineered to produce high-quality video from the viewer’s perspective using consumer-grade cameras and hardware, thereby reducing deployment costs and simplifying setup for complete three-dimensional environments.

Figure 1. Overview of the capture setup

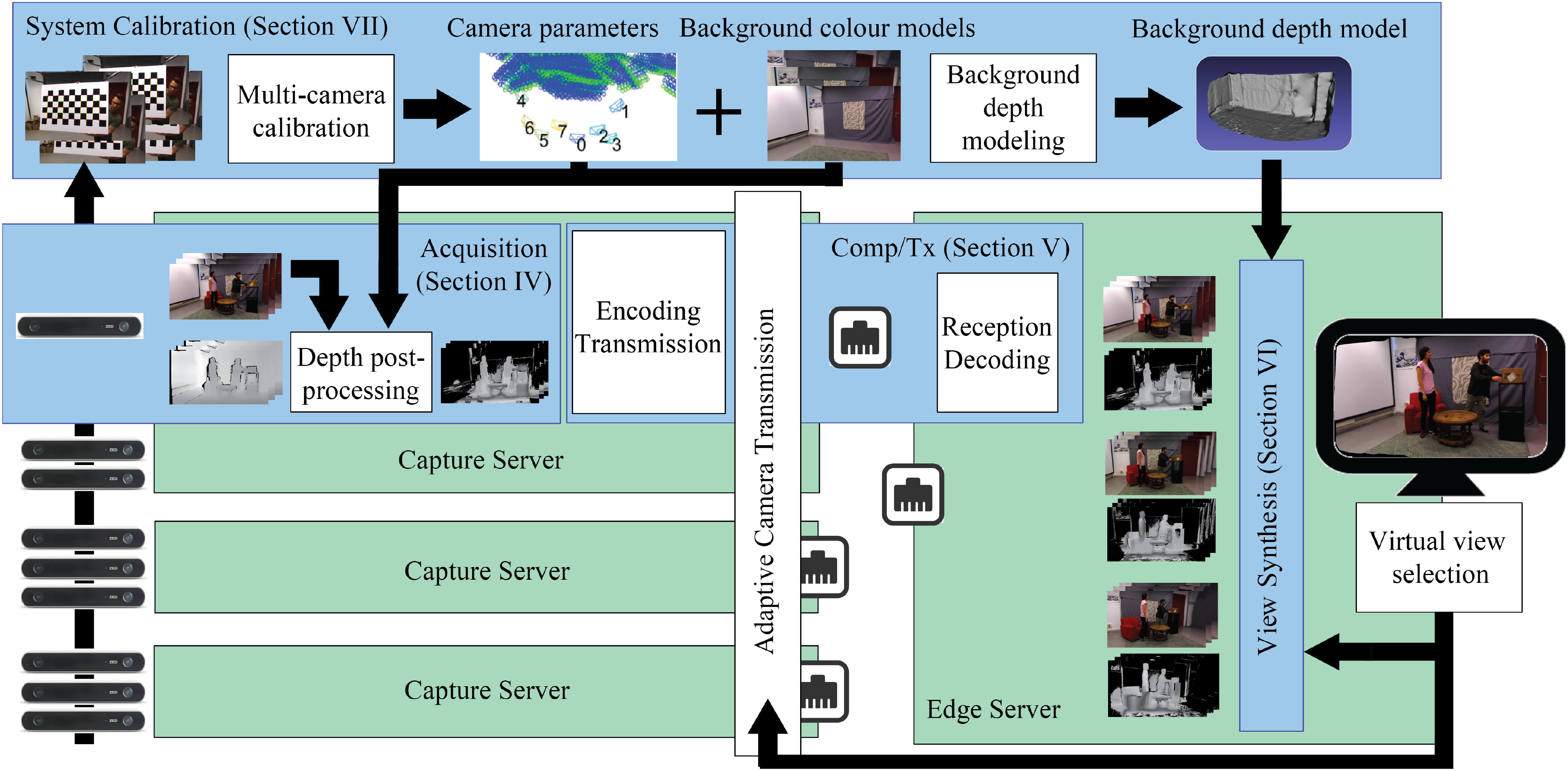

The system architecture (see Figure 2) comprises the acquisition and encoding of multi-view and depth data on multiple capture servers, and the synthesis of virtual views on an edge server. All system blocks are designed to overcome limitations imposed by hardware and network, which directly affect depth data accuracy and, consequently, the quality of virtual view synthesis. FVV Live’s design allows for an arbitrary number of cameras and capture servers.

Figure 2. Block diagram of the FVV Live system

The first stage of the FVV system is the capture of video sequences of the scene. We use Stereolabs ZED cameras, which estimate depth by purely passive stereo, so any number of them can operate at the same time without interfering with one another. Figure 1 shows our capture setup with nine ZED cameras. Professional multi-camera setups typically use dedicated hardware clock sources to synchronize frame capture across all cameras, but the ZED camera, being a consumer device, does not offer this possibility. Each camera is simply connected to the control computer via a USB 3.0 link, so we have developed our own software synchronization scheme using timestamps and a shared clock source distributed via PTP (IEEE 1588-2002).
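As a rough illustration of timestamp-based software synchronization, the sketch below groups frames from several cameras into common frame slots, assuming every timestamp already refers to the shared clock. The frame rate, the function names, and the nearest-slot rounding rule are assumptions made for this example; they are not taken from the FVV Live implementation.

```python
from collections import defaultdict

FRAME_PERIOD_NS = 33_333_333  # hypothetical 30 fps capture rate


def group_by_slot(frames, period_ns=FRAME_PERIOD_NS):
    """Group (camera_id, timestamp_ns) pairs into frame slots.

    Each timestamp is rounded to the nearest multiple of the frame period,
    so frames captured at nearly the same instant share a slot index.
    """
    slots = defaultdict(dict)
    for camera_id, timestamp_ns in frames:
        slot = (timestamp_ns + period_ns // 2) // period_ns
        slots[slot][camera_id] = timestamp_ns
    return slots


def complete_sets(frames, num_cameras, period_ns=FRAME_PERIOD_NS):
    """Return, in time order, the slots in which every camera delivered a frame."""
    slots = group_by_slot(frames, period_ns)
    return [slots[s] for s in sorted(slots) if len(slots[s]) == num_cameras]
```

Slots with a missing frame are simply dropped here; a real system would also have to decide how long to wait for late frames.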

Figure 3. ZED camera output: colour (l) and depth (r) frames.

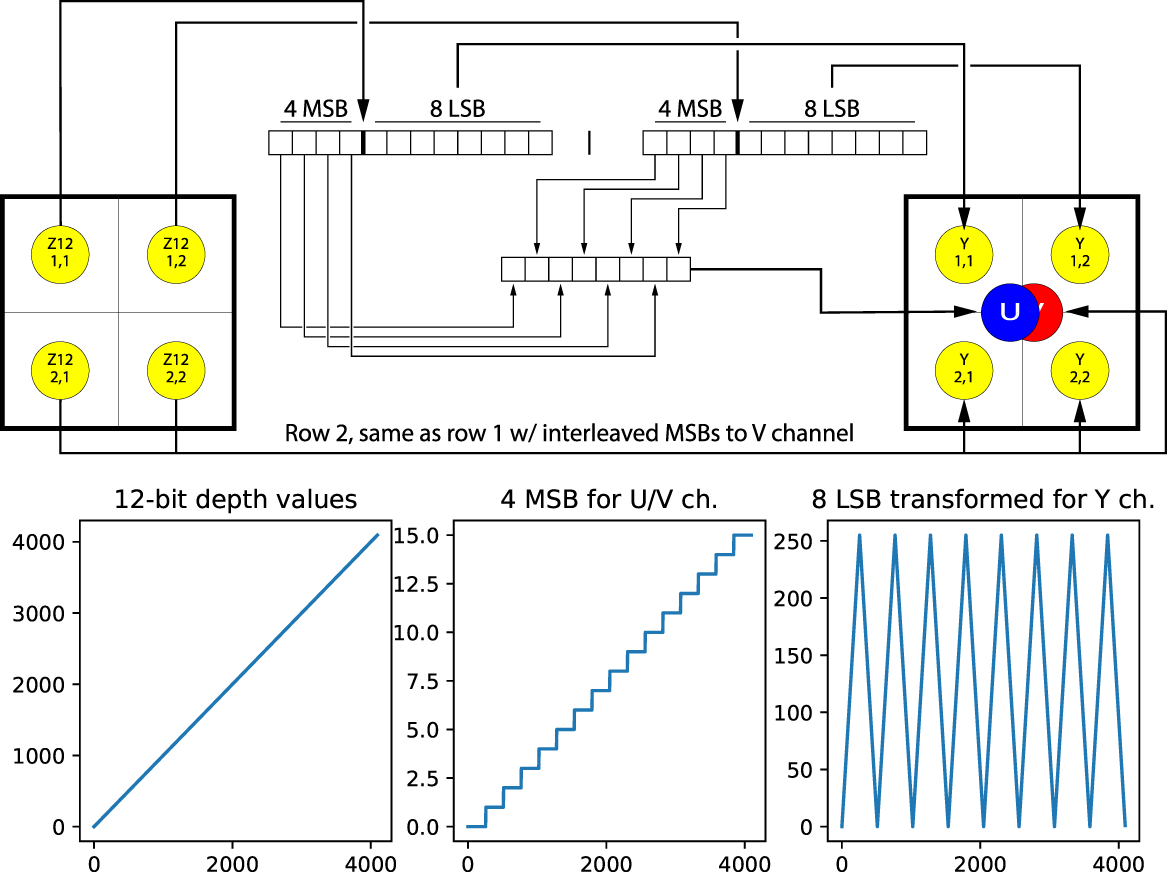

Each camera produces regular images with a corresponding depth estimate (see Figure 3), which is actually computed not by the camera itself but by software running on the GPU of the control node. The regular image sequence is compressed with standard (lossy) video codecs, which cannot be blindly applied to the depth data. First, more than 8 bits per pixel are needed to maintain adequate reconstruction quality. Second, lossy coding schemes are unacceptable because they would alter the scene structure. Therefore, we have adapted a lossless 4:2:0 video codec to carry 12 bits per pixel of depth information by carefully arranging the depth data for each 2 × 2 pixel substructure (see Figure 4).

Figure 4. Adaptation of 2 × 2 pixel cells to 4:2:0 sampling structures to transport 12 bpp of depth data.
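The bit budget works out exactly: with 8-bit samples, a 2 × 2 cell in 4:2:0 holds four luma samples plus one sample for each chroma plane, that is 6 × 8 = 48 bits, which matches 4 depth values × 12 bits. The sketch below shows one possible arrangement. The specific layout (most significant bits in luma, leftover nibbles in chroma) and the function names are assumptions for illustration and may differ from the arrangement shown in Figure 4.

```python
def pack_cell(d00, d01, d10, d11):
    """Pack four 12-bit depth values (one 2x2 cell) into six 8-bit samples.

    Returns (y00, y01, y10, y11, u, v). Assumed layout: each luma sample
    holds the 8 most significant bits of one depth value, and the four
    leftover 4-bit nibbles are paired into the two chroma samples.
    """
    depths = (d00, d01, d10, d11)
    if any(not 0 <= d < 4096 for d in depths):
        raise ValueError("depth values must fit in 12 bits")
    luma = tuple(d >> 4 for d in depths)
    low = [d & 0xF for d in depths]
    u = (low[0] << 4) | low[1]
    v = (low[2] << 4) | low[3]
    return (*luma, u, v)


def unpack_cell(y00, y01, y10, y11, u, v):
    """Recover the four 12-bit depth values packed by pack_cell."""
    low = (u >> 4, u & 0xF, v >> 4, v & 0xF)
    return tuple((y << 4) | n for y, n in zip((y00, y01, y10, y11), low))
```

The round trip only recovers the depth exactly if the codec is lossless, which is why a lossy codec cannot be used for this stream.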

A dedicated server receives the video and depth streams from the capture nodes and generates the synthetic view according to the viewpoint and camera orientation selected by the user. In principle, if sufficient computing power and network capacity were available, it would be desirable to use as much information about the scene as possible to compute the virtual view. However, even the most powerful computers cannot solve problems such as multi-view stereo in real time, so there is little point in transmitting all available data. Recognizing this fact, and considering that real-time operation is a must for us, we dynamically select the three reference cameras closest to the virtual viewpoint, warp them toward the virtual viewpoint using depth-image-based rendering (DIBR) techniques, and blend their contributions to produce the synthesized foreground view.
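The reference-camera selection can be sketched as a nearest-neighbour search over camera positions. In the example below, the use of Euclidean distance between camera centres, the inverse-distance blending weights, and all names are assumptions for illustration; the text above does not specify the distance measure or the blending rule.

```python
import math


def nearest_cameras(camera_positions, viewpoint, k=3):
    """Return the ids of the k cameras closest to the virtual viewpoint.

    camera_positions maps a camera id to an (x, y, z) position.
    """
    ranked = sorted(
        camera_positions,
        key=lambda cam_id: math.dist(camera_positions[cam_id], viewpoint),
    )
    return ranked[:k]


def blend_weights(camera_positions, viewpoint, selected, eps=1e-9):
    """Inverse-distance weights normalised to sum to 1 (assumed blending rule)."""
    inverse = {
        cam_id: 1.0 / (math.dist(camera_positions[cam_id], viewpoint) + eps)
        for cam_id in selected
    }
    total = sum(inverse.values())
    return {cam_id: w / total for cam_id, w in inverse.items()}
```

Because the selection depends only on the current virtual viewpoint, it can be re-evaluated as the user navigates, so only the streams of the selected cameras need to be processed at any moment.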

To reduce the amount of data to transmit and process, we note that the color of the background may change over time due to shadows or illumination changes, but its structure (depth) does not. Consequently, we can afford to generate a detailed model of the background depth offline, during system calibration, using techniques that are too expensive to run in real time (e.g., Structure from Motion, Multi-View Stereo), so that during online operation we only need to send depth information about the foreground. Finally, we synthesize the virtual view by combining background and foreground layers, obtaining a natural result at reduced computational cost.
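A minimal sketch of the layered idea follows, assuming the foreground is found by comparing the live depth map with the precomputed background depth model. The thresholding rule, the tolerance parameter, and the function names are hypothetical and stand in for whatever segmentation the system actually uses.

```python
def foreground_mask(live_depth, background_depth, tolerance):
    """Mark pixels that are closer to the camera than the background model.

    A pixel counts as foreground when its live depth is smaller than the
    background depth by more than `tolerance` (same units as the depth maps).
    """
    return [
        [bg - d > tolerance for d, bg in zip(live_row, bg_row)]
        for live_row, bg_row in zip(live_depth, background_depth)
    ]


def composite(foreground, background, mask):
    """Layer foreground pixels over the background wherever the mask is set."""
    return [
        [fg if m else bg for fg, bg, m in zip(fg_row, bg_row, mask_row)]
        for fg_row, bg_row, mask_row in zip(foreground, background, mask)
    ]
```

Only the pixels where the mask is set need live depth data; the rest can rely on the background model built during calibration.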

FVV Live features low motion-to-photon and end-to-end latencies, enabling seamless free viewpoint navigation and immersive communications. Furthermore, FVV Live’s visual quality has been evaluated subjectively with satisfactory results, and additional comparative tests show that viewers prefer it over state-of-the-art DIBR alternatives.

Your Gateway to Limitless Possibilities

In conclusion, FVV Live represents an exciting technological advancement that is redefining how we interact with audiovisual content. From XR-enriched broadcasts to more immersive and engaging interactive experiences, this technology offers revolutionary potential for businesses looking to stand out in a competitive market.

Furthermore, the flexibility of this technology reduces the need for multiple expensive cameras and equipment, saving time and resources for production companies. This technology is breaking barriers and offering exciting opportunities for storytelling and real-time interaction. Get ready to dive into a world of limitless possibilities with FVV Live!

Refs

P. Carballeira, C. Carmona, C. Díaz, D. Berjón, D. Corregidor, J. Cabrera, F. Morán, C. Doblado, S. Arnaldo, M.M. Martín, N. García, “FVV Live: A Real-Time Free-Viewpoint Video System With Consumer Electronics Hardware”, IEEE Trans. Multimedia, vol. 24, pp. 2378-2391, May 2022 (Early Access since May 2021).
